Building the Future of AI Together

A friendly thought

As the internet evolves, so too do the ways in which we access and interact with information. In the earliest days of the web, automated bots played a simple, well-understood role: indexing sites for search, checking links, or scraping data according to clear rules set by website owners.

But with the rise of AI-powered assistants and user-driven agents, the boundary between what counts as "just a bot" and what serves the immediate needs of real people has become increasingly blurred.

Modern AI assistants work fundamentally differently from traditional web crawling. When you ask Definable AI a question that requires current information—say, "What are the latest reviews for that new restaurant?"—the AI doesn't already have that information sitting in a database somewhere. Instead, it goes to the relevant websites, reads the content, and brings back a summary tailored to your specific question.

Traditional crawlers systematically visit millions of pages to build massive databases, whether or not anyone asked for that specific information. User-driven agents, by contrast, fetch content only when a real person requests something specific, and they use that content immediately to answer the user's question. Definable AI's user-driven agents do not store the information or use it for training.
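The contrast can be sketched in a few lines of Python. This is a minimal illustration, not a description of how Definable AI or any real crawler is implemented: the in-memory `FAKE_WEB` dictionary, the `fetch` helper, and both function names are assumptions made up for the example, and no network access is involved.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
FAKE_WEB: Dict[str, Tuple[str, List[str]]] = {
    "https://example.com/": (
        "Home page",
        ["https://example.com/menu", "https://example.com/reviews"],
    ),
    "https://example.com/menu": ("Menu: soup, salad", []),
    "https://example.com/reviews": ("Reviews: 4.5 stars", ["https://example.com/"]),
}


def fetch(url: str) -> Tuple[str, List[str]]:
    """Stand-in for an HTTP request (assumption: a real agent would fetch over the network)."""
    return FAKE_WEB[url]


def crawl(seed: str) -> Dict[str, str]:
    """Crawler pattern: follow every link and store every page, with no user question involved."""
    index: Dict[str, str] = {}
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        if url in index:
            continue  # already visited
        text, links = fetch(url)
        index[url] = text      # the page is kept for later use
        queue.extend(links)    # and its links are scheduled too
    return index


def answer_on_demand(question: str, urls: List[str]) -> str:
    """User-driven pattern: read only the pages needed for this question, keep nothing afterwards."""
    snippets = [fetch(url)[0] for url in urls]
    return f"Q: {question} | A (from {len(urls)} page(s)): " + "; ".join(snippets)
```

Calling `crawl("https://example.com/")` returns a stored index of all three pages, while `answer_on_demand("reviews?", ["https://example.com/reviews"])` touches a single page and returns only the answer string.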

The difference between automated crawling and user-driven fetching isn't just technical—it's about who gets to access information on the open web. When search engines crawl to build their indexes, that's different from when they fetch a webpage because you asked for a preview. User-triggered fetchers prioritize your experience over restrictions because these requests happen on your behalf.

The same applies to AI assistants. When Definable AI fetches a webpage, it's because you asked a specific question requiring current information. The content isn't stored for training—it's used immediately to answer your question.

When companies mischaracterize user-driven AI assistants as malicious bots, they're arguing that any automated tool serving users should be suspect. That position would criminalize email clients, web browsers, and any other service a would-be gatekeeper decides it doesn't like.

This controversy reveals that some systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats. If you can't tell a helpful digital assistant from a malicious scraper, then you probably shouldn't be making decisions about what constitutes legitimate web traffic.

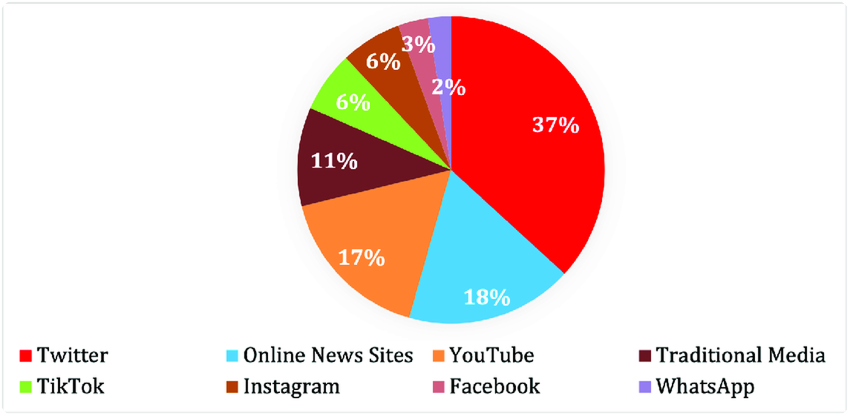

This overblocking hurts everyone. Consider someone using AI to research medical conditions, compare product reviews, or access news from multiple sources. If their assistant gets blocked as a "malicious bot," they lose access to valuable information.

The result is a two-tiered internet where your access depends not on your needs but on whether your chosen tools have been blessed by infrastructure controllers, who care more about the means you use than about what you need. This undermines user choice and threatens the open web's accessibility for innovative services competing with established giants.

An AI assistant works just like a human assistant. When you ask it a question that requires current information, it doesn't already know the answer. It looks the answer up for you in order to complete the task you've asked for.

On Definable AI and all other agentic AI platforms, this happens in real time, in response to your request, and the information is used immediately to answer your question. It's not stored in massive databases for future use, and it's not used to train AI models.

User-driven agents only act when users make specific requests, and they only fetch the content needed to fulfill those requests. This is the fundamental difference between a user agent and a bot.

The internet was built on the principle of open access to information. As AI assistants become more sophisticated, we must ensure that principle remains intact. This means:

Clear Standards: Establishing industry-wide standards that distinguish between automated crawlers and user-driven agents.

User Rights: Protecting users' rights to access information through their chosen tools and assistants.

Technical Accuracy: Ensuring that infrastructure providers properly understand and categorize different types of web traffic.

Open Communication: Encouraging dialogue between AI platforms, infrastructure providers, and website owners.
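As a rough illustration of what a shared standard for telling crawlers from user-driven agents might look like on the receiving side, here is a minimal sketch that sorts requests by a declared User-Agent token. The token names `ExampleCrawlerBot` and `ExampleAssistant-User` are hypothetical, not identifiers published by Definable AI or anyone else. Because a User-Agent header can be spoofed, a real deployment would need an additional verification step that this sketch leaves out.

```python
from enum import Enum


class TrafficKind(Enum):
    CRAWLER = "crawler"
    USER_DRIVEN = "user-driven"
    UNKNOWN = "unknown"


# Hypothetical tokens, lowercase for case-insensitive matching.
CRAWLER_TOKENS = ("examplecrawlerbot",)
USER_DRIVEN_TOKENS = ("exampleassistant-user",)


def classify(user_agent: str) -> TrafficKind:
    """Classify a request by the token it declares in its User-Agent header."""
    ua = user_agent.lower()
    if any(token in ua for token in USER_DRIVEN_TOKENS):
        return TrafficKind.USER_DRIVEN
    if any(token in ua for token in CRAWLER_TOKENS):
        return TrafficKind.CRAWLER
    # Anything that declares neither token is left unclassified rather than blocked.
    return TrafficKind.UNKNOWN
```

The design choice worth noting is the third category: traffic that matches neither list is reported as unknown instead of being forced into "bot" or "user", which leaves room for a site owner to apply a separate policy to it.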

The future of the internet depends on our ability to adapt to new technologies while preserving the core values of accessibility and user choice. User-driven AI assistants represent a natural evolution of how people access information—not a threat to the open web, but a continuation of its promise.

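Definable AI is committed to responsible AI that serves users' needs while respecting the infrastructure of the open web. Our user-driven agents act only on specific user requests, fetch only the content needed to fulfill them, and do not store that content or use it to train AI models.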

We believe in an internet where users have the freedom to choose the tools that best serve their needs, and where AI assistants work as true partners in accessing and understanding information.

The distinction between bots and agents isn't just technical—it's fundamental to the future of user autonomy on the open web. And Definable AI stands firmly on the side of user choice, transparency, and responsible innovation.