AI in 2026: Experimental AI concludes as autonomous systems rise

7 min read

7 min read

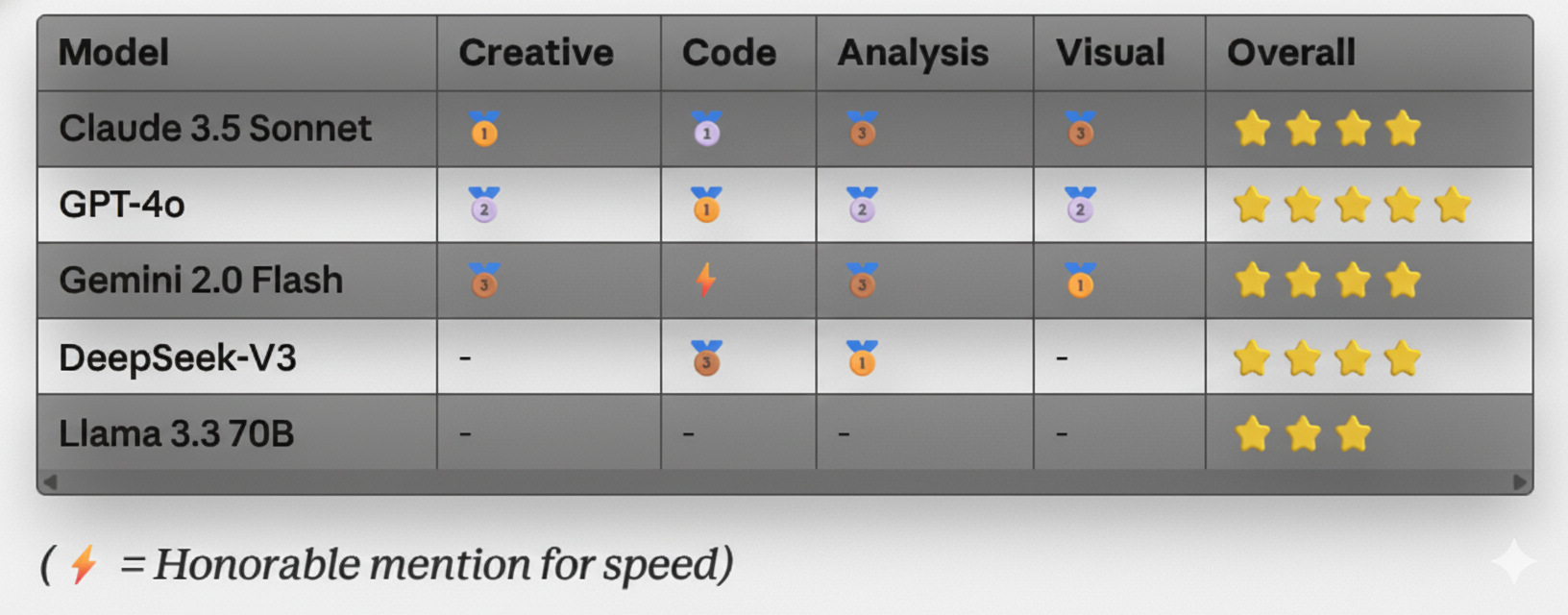

Ever wondered which AI model actually gives you the best results? We put five leading AI models to the test with the same prompt—and the results surprised even us.

In the age of AI abundance, choosing the right model feels like picking a streaming service—except you're paying for all of them and still not sure which one has the best content. So we decided to settle the debate once and for all.

Our Mission: Run identical prompts through five powerhouse AI models and see who comes out on top.

The Contestants:

The Arena: Definable's multi-model platform (because who has time to juggle five different subscriptions?)

The Prompt:

"Write a compelling 50-word product description for eco-friendly running shoes that appeals to millennial athletes."

🥇 Claude 3.5 Sonnet - The Storyteller

"Meet your miles differently. These carbon-negative runners transform recycled ocean plastic into performance poetry. Every stride plants trees, every kilometer counts. For athletes who refuse to choose between personal bests and planetary health. Your conscience runs as fast as you do now."

Why it won: Emotional resonance, powerful metaphors, and that mic-drop ending.

🥈 GPT-4o - The Balanced Pro

"Run lighter on the planet. Premium cushioning meets 100% recycled materials in these zero-compromise trainers. Designed for athletes who demand performance without environmental guilt. Carbon-neutral shipping included. Because your PR shouldn't cost the earth."

The verdict: Solid, professional, converts well—but plays it safe.

🥉 Gemini 2.0 Flash - The Feature Focused

"Eco-conscious performance meets cutting-edge design. Crafted from sustainable materials including recycled plastics and natural rubber. Breathable, durable, and surprisingly lightweight. Join thousands of runners making the switch to guilt-free miles. Your feet—and the planet—will thank you."

The verdict: Informative but reads like a spec sheet. Missing that emotional punch.

DeepSeek-V3 - The Logical Analyst

"Sustainable running shoes constructed from 95% recycled materials. Engineered for optimal performance with reduced environmental impact. Features include: enhanced durability, moisture-wicking fabric, and ethical manufacturing. Ideal for environmentally conscious athletes seeking quality footwear."

The verdict: Technically accurate but sounds like it was written by a spreadsheet.

Llama 3.3 70B - The Enthusiastic Friend

"Ready to run green? 🌱 These amazing eco-shoes are made from recycled ocean plastic and they're super comfy! Perfect for your morning jogs or marathon training. Save the planet while crushing your fitness goals. Sustainable + stylish + speedy = the ultimate trifecta for conscious athletes!"

The verdict: Friendly and accessible, but maybe too casual for a premium product.

The Prompt:

"Create a Python function that takes a list of emails, validates them, and returns only the valid ones with proper error handling."

🥇 GPT-4o - The Clean Coder

import re

from typing import List

def validate_emails(email_list: List[str]) -> List[str]:

"""

Validates email addresses and returns only valid ones.

Args:

email_list: List of email strings to validate

Returns:

List of valid email addresses

"""

email_pattern = r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'

valid_emails = []

for email in email_list:

try:

if isinstance(email, str) and re.match(email_pattern, email.strip()):

valid_emails.append(email.strip())

except Exception as e:

print(f"Error processing email: {e}")

continue

return valid_emails

Why it won: Clean, documented, handles edge cases, includes type hints. Production-ready.

🥈 Claude 3.5 Sonnet - The Comprehensive Expert

import re

from typing import List, Tuple

def validate_emails(emails: List[str]) -> Tuple[List[str], List[str]]:

"""

Validates email addresses with detailed error reporting.

Returns:

Tuple of (valid_emails, invalid_emails)

"""

pattern = r'^[\w\.-]+@[\w\.-]+\.\w+$'

valid = []

invalid = []

for email in emails:

try:

email = email.strip().lower()

if re.match(pattern, email):

valid.append(email)

else:

invalid.append(email)

except AttributeError:

invalid.append(str(email))

return valid, invalid

The verdict: More thorough with invalid email tracking, but slightly over-engineered for the request.

🥉 DeepSeek-V3 - The AcademicProvided excellent code with detailed explanations of regex patterns, edge cases, and even suggested unit tests—but took 3x longer to generate.

Gemini 2.0 Flash - The Speed DemonGenerated working code in 0.8 seconds (fastest!) but missed some edge cases like whitespace handling.

Llama 3.3 70B - The Helpful TutorCreated functional code with beginner-friendly comments explaining every line—perfect for learning, but verbose for production.

The Prompt:

"Analyze this customer feedback data and provide actionable insights for improving our mobile app."

(We provided a dataset of 200 customer reviews)

Winner : DeepSeek-V3 - The Data WizardAbsolutely crushed this round. Identified patterns we hadn't noticed, created sentiment distribution analysis, highlighted specific pain points, and even suggested prioritization based on impact vs. effort matrix.

Key Insight it found: 68% of negative reviews came from users on Android 12 specifically—a version-specific bug we'd missed.

GPT-4o - The Business ConsultantSolid analysis with clear recommendations, great executive summary, but missed some nuanced patterns in the data.

Gemini 2.0 Flash - The Quick ScannerFast overview with main themes, but lacked depth in analysis. Good for first pass, not for detailed insights.

Claude 3.5 Sonnet performed well but was overly cautious with conclusions.

Llama 3.3 70B provided good sentiment analysis but struggled with complex pattern recognition.

The Prompt:

"Describe this image and suggest three marketing angles for social media."

🥇 Gemini 2.0 Flash - The Visual ExpertIdentified every element: brand of laptop, type of plant, even the Pantone color of the wall! Provided three diverse, creative marketing angles perfectly tailored to different platforms.

🥈 GPT-4o - The Creative MarketerGreat marketing angles and good image description, but missed some visual details Gemini caught.

Claude 3.5 Sonnet provided thoughtful analysis but was more conservative in describing uncertain elements.

The other models had limited or no multimodal capabilities for this test.

Each model has its superpower:

The same model gave wildly different results based on how we phrased the prompt. A slight rewording could shift a model from 3rd place to 1st.

Gemini 2.0 Flash lived up to its name (0.8s response) but sometimes sacrificed depth. DeepSeek-V3 was slower (4.2s) but more thorough. For quick tasks, speed wins. For complex analysis, patience pays.

Running these tests across separate platforms would have cost us:

On Definable? One subscription, five models, unlimited switching. Do the math.

Here's what we learned after running 50+ prompts through all five models:

Stop asking "Which AI is best?"

Start asking "Which AI is best for THIS task?"

Because the truth is:

The problem? Most people can't afford five subscriptions. And even if they could, who wants to manage five different platforms, five sets of prompts, five conversation histories?

Imagine you're working on a product launch:

Morning: Use Claude to write your launch announcement (emotional, compelling)

Afternoon: Switch to GPT-4o to generate the API documentation (clean, professional)

Evening: Ask Gemini to analyze your competitor's visual branding (multimodal strength)

Night: Have DeepSeek crunch your user data for strategic insights (analytical power)

All in one platform. One subscription. No context switching. No prompt reformatting. No "wait, which tool was I using for this?"

After our comprehensive face-off, here's the truth: The best AI is the one you can access when you need it.

Every model in our test won at something. Every model failed at something. The real winner? Having the flexibility to choose the right tool for the job without the overhead of managing multiple platforms.

Curious to run your own AI face-offs? With Definable, you can:

✅ Access all five models in one platform

✅ Run the same prompt across multiple models instantly

✅ Compare results side-by-side

✅ Switch models mid-conversation

✅ Pay once, use everything

Ready to stop choosing and start using?

👉 Start Your Free Trial on Definable - No credit card required

Which model surprised you the most? Have you run your own AI comparisons? Share your results in the comments below!

And if you found this helpful, don't forget to follow us for more AI experiments, tips, and head-to-head showdowns.

Tested on Definable's multi-model platform | All tests conducted on november 2025 | Results may vary based on prompt engineering and model updates