
August 10, 2025. 8 min read

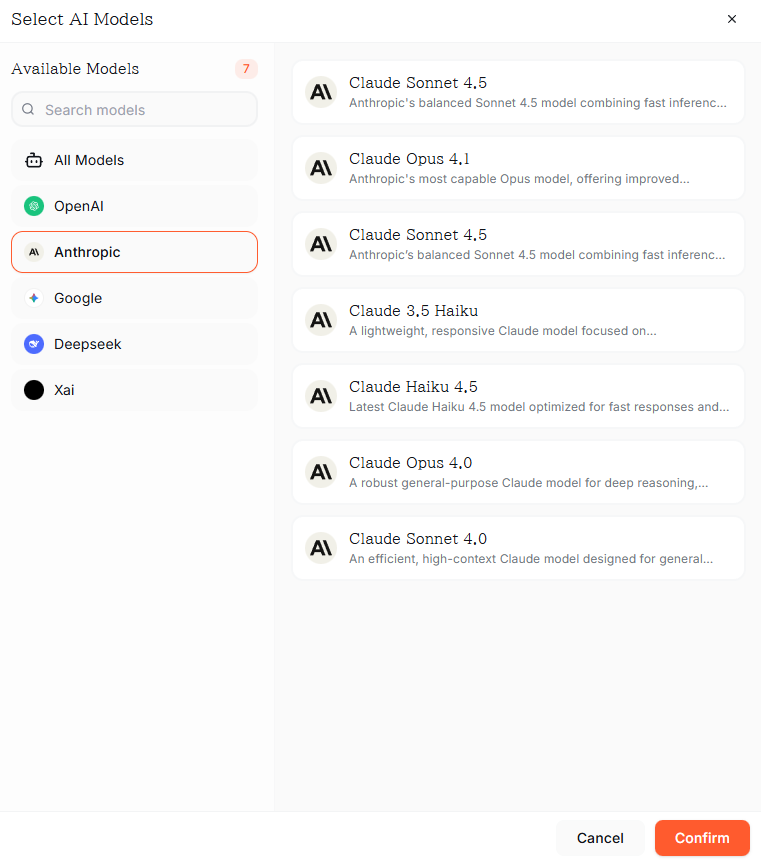

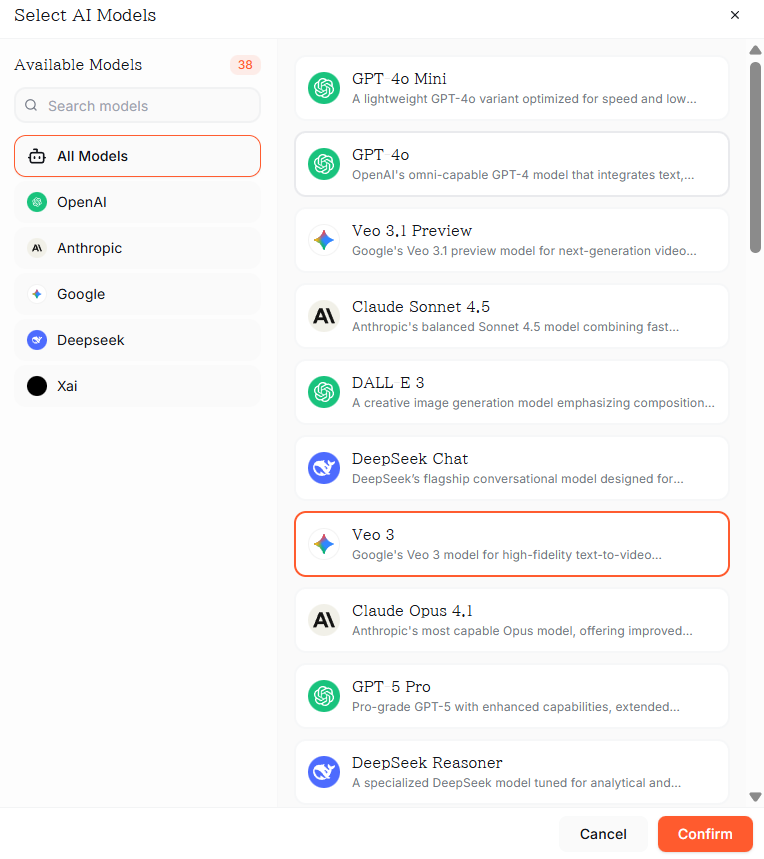

Most developers stick to one AI model at a time, ping-ponging between tools like they're switching TV channels. But what if you could orchestrate five different AI brains — including Claude — working together on the same problem? Welcome to Definable, where Claude isn't flying solo anymore.

Here's the uncomfortable truth: you're probably using Claude the way everyone uses AI — one model, one task, one limitation at a time. Need creative writing? Claude. Want coding help? Maybe you switch to another model. Looking for data analysis? Back to Claude, hoping it nails it this time.

This is what I call "model monogamy" — and it's holding you back.

The problem isn't Claude. Claude is phenomenal at reasoning, nuanced conversation, and handling complex context. But every AI model has strengths and blind spots. Claude might excel at explaining your gnarly database schema, but another model could generate that SQL query faster. One model writes beautiful prose; another crushes mathematical proofs.

The game-changer? Definable lets you use Claude alongside GPT-4, Gemini, Llama, and Mistral — all in one workspace. It's like having a dev team where each member brings different superpowers to the table.

Definable isn't another AI chat wrapper. It's a multi-model orchestration platform where you can:

In short: Claude becomes even more powerful when it's part of an ensemble, not a solo act.

Here's who's on the roster and what they bring to the party:

Claude is your go-to for:

When to tap Claude in Definable: Architecture decisions, explaining legacy code, writing documentation, customer-facing content.

GPT-4 is still the jack-of-all-trades. Use it for:

When to use: Initial ideation, rapid iteration, when you need "good enough, fast."

Google's Gemini shines at:

When to use: Research-heavy tasks, data analysis, fact-checking Claude's creative suggestions.

Meta's Llama is great for:

When to use: High-volume, lower-complexity tasks; processing logs; sentiment analysis.

Mistral brings European efficiency to AI:

When to use: International projects, code snippets, when you need speed without sacrificing quality.
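To make the "right model, right job" idea concrete, here's a minimal sketch of a routing table built from the "When to use" notes above. Everything in it is an assumption for illustration: the task labels, the `pick_model` helper, the fallback to Claude, and the pairing of the roster entries with GPT-4, Gemini, Llama, and Mistral (following the order the models are named earlier). It is not Definable's API.

```python
# Hypothetical routing table based on the "When to use" notes.
# Model names are plain labels, not identifiers from any real API.
ROUTES = {
    "architecture": "Claude",
    "legacy-code-explanation": "Claude",
    "documentation": "Claude",
    "ideation": "GPT-4",
    "research": "Gemini",
    "data-analysis": "Gemini",
    "log-processing": "Llama",
    "sentiment-analysis": "Llama",
    "code-snippet": "Mistral",
}

DEFAULT_MODEL = "Claude"  # assumption: fall back to Claude for unlisted tasks


def pick_model(task_type: str) -> str:
    """Return the model label for a task type, or the default if unknown."""
    return ROUTES.get(task_type.strip().lower(), DEFAULT_MODEL)


if __name__ == "__main__":
    for task in ("documentation", "log-processing", "haiku"):
        print(f"{task} -> {pick_model(task)}")
```

A plain dictionary is enough here because the mapping is small and you'll probably want to tweak it by hand as you learn what works for your own projects.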

You need to build a new user authentication system. Here's how Definable's multi-model approach destroys the old way:

Old way (Claude solo):

Definable way:

Total time: 17 minutes. Quality: Way higher. Coffee consumed: Same. ☕

You're writing a technical blog post about microservices.

Definable orchestration:

Result: A post that reads like Claude wrote it (because it mostly did), but with multi-model verification and enhanced technical depth. Your readers think you have a research team. You have Definable.

You've got a hairy production bug. Logs are cryptic. Stack trace is useless.

Definable investigation:

You just went from "WTF is breaking" to "Here's exactly why and how to fix it" in under 15 minutes, using each model's strength.

Can't decide which solution is best? Ask all 5 models the same question and compare. It's like Stack Overflow, but the experts actually agree on something.

Example:

"What's the best state management solution for a React app with real-time collaboration?"

Now you have 5 perspectives. Pick the one that fits YOUR context best, or combine ideas. Suddenly you're not trusting one AI's bias — you're making an informed decision.
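If you were wiring up this "ask everyone, then compare" pattern yourself, it could look roughly like the sketch below. The models are stand-in functions (`make_stub` is a hypothetical helper that just echoes the prompt), so nothing here reflects how Definable or any vendor client actually works. Swap in real calls for your own setup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def ask_all(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model in parallel and collect answers by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


def make_stub(name: str) -> ModelFn:
    """Build a placeholder model that just reports who was asked."""
    return lambda prompt: f"[{name}] answer to: {prompt}"


if __name__ == "__main__":
    roster = {n: make_stub(n) for n in ("Claude", "GPT-4", "Gemini", "Llama", "Mistral")}
    question = (
        "What's the best state management solution for a React app "
        "with real-time collaboration?"
    )
    for name, answer in ask_all(question, roster).items():
        print(f"{name}: {answer}")
```

Running the calls in a thread pool means the slowest model sets the wait time, rather than the sum of all five.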

Use faster models to generate raw output, then have Claude polish it to perfection.

Claude's context window and reasoning shine here. You get speed AND quality.
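Here's a minimal sketch of that draft-then-polish handoff, assuming each model is just a function from prompt to text. The `draft_then_polish` helper and the wording of the polish prompt are made up for illustration; the two lambdas are placeholders so the example runs offline.

```python
from typing import Callable

ModelFn = Callable[[str], str]  # prompt in, text out; a stand-in for a real client


def draft_then_polish(task: str, drafter: ModelFn, polisher: ModelFn) -> str:
    """Get a quick first draft from one model, then ask a second model to refine it."""
    draft = drafter(task)
    polish_prompt = (
        f"Task: {task}\n\n"
        f"Draft:\n{draft}\n\n"
        "Improve the draft for clarity and correctness. Return only the revised text."
    )
    return polisher(polish_prompt)


if __name__ == "__main__":
    # Placeholder models so the sketch runs without network access.
    fast_model = lambda prompt: f"rough notes on {prompt}"
    claude = lambda prompt: "polished version of:\n" + prompt
    print(draft_then_polish("rate limiting", fast_model, claude))
```

Passing the original task along with the draft gives the polishing model enough context to catch places where the draft drifted from what you asked for.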

Feed Claude information gathered by other models.

Example:

You: "Gemini, find the latest React 19 features from official docs"

[Gemini returns structured list]

You: "Claude, here's what Gemini found. Now write a migration guide for our team moving from React 18, considering we heavily use class components and Redux."

Claude gets current info pulled from the official docs and applies its superior reasoning to YOUR specific situation. This is when AI feels like actual magic.
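The handoff in that exchange boils down to pasting one model's findings into the next model's prompt. A rough sketch, assuming the findings arrive as a list of strings: `build_handoff_prompt` is a hypothetical helper, and the findings below are placeholders rather than real React 19 features.

```python
from typing import List


def build_handoff_prompt(findings: List[str], source: str, instruction: str) -> str:
    """Combine another model's findings with the follow-up instruction."""
    bullet_list = "\n".join(f"- {item}" for item in findings)
    return f"Here's what {source} found:\n{bullet_list}\n\n{instruction}"


if __name__ == "__main__":
    # Placeholder findings; in practice these come from the research model.
    gemini_findings = ["<feature 1>", "<feature 2>"]
    prompt = build_handoff_prompt(
        gemini_findings,
        source="Gemini",
        instruction=(
            "Now write a migration guide for our team moving from React 18, "
            "considering we heavily use class components and Redux."
        ),
    )
    print(prompt)
```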

Before you start throwing prompts around:

Stop thinking: "Which AI should I use?"

Start thinking: "Which AI combo solves this fastest?"

It's like cooking. You don't use only a knife OR only a pan. You use both, in sequence, to make something delicious. Same with AI models.

Will Definable make you 10x faster? Maybe. Depends if you're currently using AI like a Google replacement (tsk tsk).

Will you still write bad code? Absolutely. AI can't fix bad architecture decisions. But it can help you realize they're bad BEFORE you ship.

Is this overkill for small projects? Probably. If you're building a todo app, just use Claude. But if you're building anything production-grade with real stakes? Multi-model orchestration is a cheat code.

Will this replace learning to code? Nope. You still need to know enough to evaluate what the AI gives you. Garbage in, garbage out — across ALL models.

Using Claude alone is like having a genius on your team but refusing to let them collaborate with anyone else. It's an artificial (pun intended) limitation.

Definable lets Claude be Claude — the thoughtful, context-aware reasoning engine — while other models handle their specialties. You get:

✅ Faster iteration (right model, right job)

✅ Higher quality (multi-model verification)

✅ More creativity (cross-pollination of ideas)

✅ Better decisions (compare, don't just accept)

The developers winning with AI aren't the ones using the "best" model. They're the ones using the best combination of models. They're orchestrating, not just prompting.

So stop using Claude solo. Start using Claude with its AI squad in Definable.

Your future self (and your code reviewers, and your sanity) will thank you.

Ready to join the multi-model revolution? Try Definable and see what Claude can really do when it's not working alone.